The previous post I made about the VLDB GraphLab paper reminded me that the process of writing that paper was the first (and last) time I actually got drunk. An unusual stream of consciousness then led to me recalling the Drinking Philosophers Problem (Chandy, Misra 1984).

Most people when encounting parallelism and locking for the first time, would have heard of the Dining Philosophers Problem.

There are a few philosophers sitting around a table with forks between them. Each philosopher needs 2 forks to eat. (I have also heard a chopstick variant which really makes a lot more sense). The philosophers have a finite appetite and will only eat for a limited time before having to put the forks down. Though they may get hungry again later. How do you coordinate the philosophers so that every philosopher will always eventually get to eat? For instance, if every philospher picks up their left fork at the same time and wait for the right fork, no one will ever get to eat.

Resource Ordering

The typical solution most people are aware of is to have one of the philosophers pick up his/her right fork, before the left fork. More generally, this means to put an ordering on the forks; for instance we assign each fork a unique number. Then each philosopher picks up the fork with the lower number, then the fork with the higher number.

In this example here, everyone picks up their left fork first, except for the philosopher on the bottom left who pickes up his right fork first.

This resource ordering solution is the standard solution to managing lock order in parallel programming: that is to have everyone acquire locks in the same order.

To use a toy example of a concurrent bidirectional dictionary. Internally it may be made up two dictionaries, one in the forward and one in the backward direction.

forward_map = {}

backward_map = {}

forward_map_lock = Lock()

backward_map_lock = Lock()

def forward_lookup(k1):

with forward_map_lock:

if k1 in forward_map:

return forward_map[k1]

return None

def backward_lookup(k2):

with backward_map_lock:

if k2 in backward_map.contains:

return backward_map[k2]

return None

def insert(k1, k2):

with backward_map_lock:

with forward_map_lock:

forward_map[k1] = k2

backward_map[k2] = k1

Note that we have acquired backward_map_lock before forward_map_lock in insert. Also, observe that the lookup methods do not require both locks, but only one of them.

Now if we implement a remove method that given a key k1, delete its forward/backward entry. Ideally we would like to acquire the forward lock first to check if the key exists, then acquire the backward lock to complete the deletion. However due to the lock ordering, we must acquire the lock in the same order as insert. Acquiring the lock in the opposite direction will lead to deadlocks.

def remove(k1):

with backward_map_lock:

with forward_map_lock:

if k1 in forward_map:

k2 = forward_map[k1]

del forward_map[k1]

del backward_map[k2]

One of the key issues behind this strategy is that the locks must be acquired sequentially, which is a problem if you need to acquire a large number of locks. And in parallel graph algorithms, you may need a lot of locks.

Drinking Philosophers

It turns out there are other solutions to the dining philosopher problem, one of the most interesting ones was by K. Mani Chandy and J. Misra in 1984, published in a paper titled The Drinking Philosophers Problem. One of the most incredible properties of this solution is that it enables philosophers to acquire forks *in parallel*.

Every fork may be clean or dirty.

Initialization: Forks are initially all dirty. Philosophers are numbered (note that this is opposite of the previous solution), and forks are given the philosopher with the lower ID.

When a philosopher wants to eat, he tries to pick up all the forks he can, if he is successful, he eats.

If there is a fork the philosopher cannot pick up because his neighbor is holding it, he asks his neighbor for it.

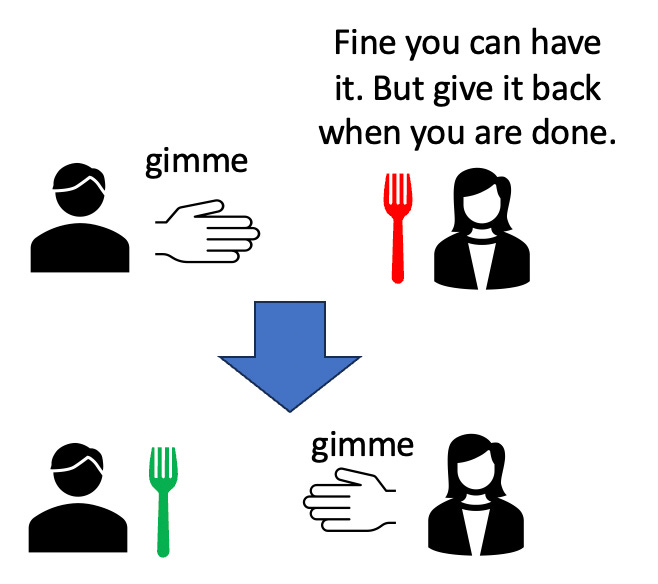

If a philosopher receives a request for a fork which is dirty, he cleans and gives it up. (Anytime a fork change hands it gets cleaned). And then immediately issues a request for the fork.

If a philosopher receives a request for a fork which is clean, he remembers the request but only gives it up when he is done eating.

If at any point a philosopher gets all the forks, he starts eating and when he finishes eating, his forks become dirty. Any pending requests must then fulfilled. Remember that whenever a fork changes hands, it gets cleaned.

And thats it!

Lets takes Philosophers 1 and 2 in the table above as an example.

Philosopher 2 becomes hungry:

Philosopher 2 asks Philosopher 1 for the fork, who gives it up and starts eating.

Philosopher 1 becomes hungry while Philosopher 2 is eating and asks for the fork:

When philosopher 2 finishes eating, the fork becomes dirty. It is cleaned when handing it back to philosopher 1 who can now start eating.

The proof that this is deadlock free, and always guarantees progress is remarkable and it depends on proving that the “precedence graph” (which philosopher has priority) is cycle free.

What does this have to do with drinking philosophers? The paper describes an extension to the dining philosophers problem which allows philosophers to only want a subset of the resources.

As long as you have a setting where resources can only be shared between exactly two processes, this solution works for an arbitrary number of resources (forks), and processes (philosophers) and where a process may not always need all the of the resources. It has the benefit that it allows resources to be acquired in parallel without having to wait sequentially for one resource at a time. We further extended the algorithm in PowerGraph to enable distributed locking over graph edges. I will love to hear about other settings in which this could be applied!

great post! Loved the visuals.